UK: The FCA, Bank of England and PRA issue their strategic approach to regulating AI in response to the government’s AI White Paper

The UK’s financial regulators have responded to the government’s White Paper on its approach to regulating the use of artificial intelligence and machine learning (AI). Publications from the Financial Conduct Authority (FCA), the Bank of England and the Prudential Regulation Authority (PRA), each dated 22 April 2024, signal a principles-based approach for the UK financial sector in relation to AI and indicate that regulated entities are unlikely to be bound by prescriptive rules specifically governing the use of AI, at least for the foreseeable future.

Background

The UK government launched a White Paper on its intended approach to regulating AI on 29 March 2023, which it said would ‘turbocharge growth’ by driving responsible innovation and maintaining public trust. Following a consultation period, the government issued its response on 6 February 2024, in which it made clear that it would not rush to legislate or risk implementing ‘quick-fix’ rules that would quickly become outdated or ineffective given the rapid pace of development in this area. Instead, the government stated its intention to empower existing regulators to address AI risks in a targeted way, tailored to the needs of each sector.

The government’s approach to ensuring responsible AI use is centred around five cross-sectoral principles:

- Safety, security and robustness

- Appropriate transparency and explainability

- Fairness

- Accountability and governance

- Contestability and redress

As part of its consultation response, the government called upon regulators in key sectors to publish updates by the end of April 2024 outlining their strategic approach to AI, including an explanation of their current capability to address AI and the actions they are taking to ensure they have the right structures and skills in place.

On 22 April 2024, the financial regulators responded with the following publications:

- the FCA published an AI Update (the “FCA Update”) in response to the government’s White Paper and its further paper Implementing the UK’s AI regulatory principles: initial guidance for regulators; and

- the Bank of England and the Prudential Regulation Authority (PRA) published a letter addressed to the Secretary of State for Science, Innovation and Technology, Michelle Donelan MP, and the Economic Secretary to the Treasury and City Minister, Bim Afolami MP, setting out an update on their approach to AI (the “BoE/PRA Update”).

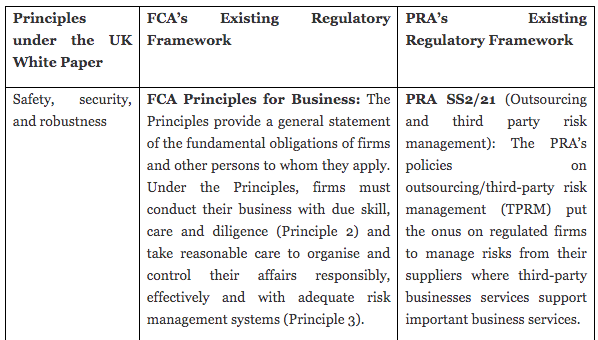

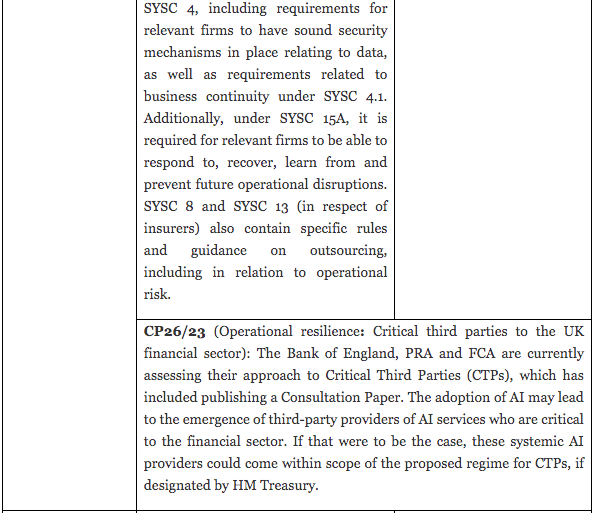

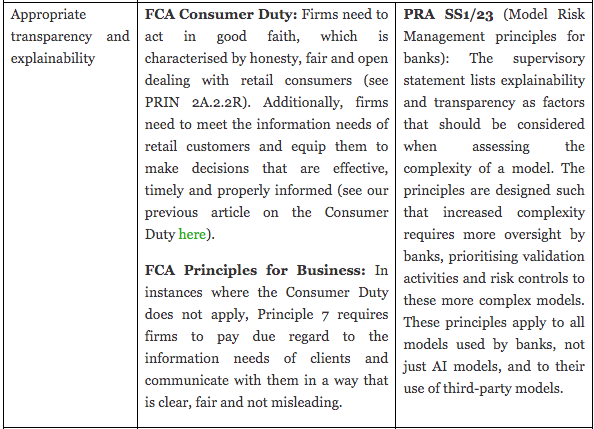

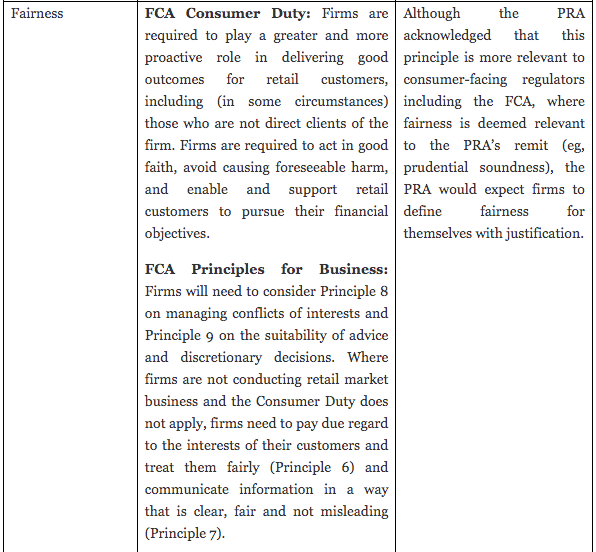

Alignment with the government’s approach and no new regulation expected

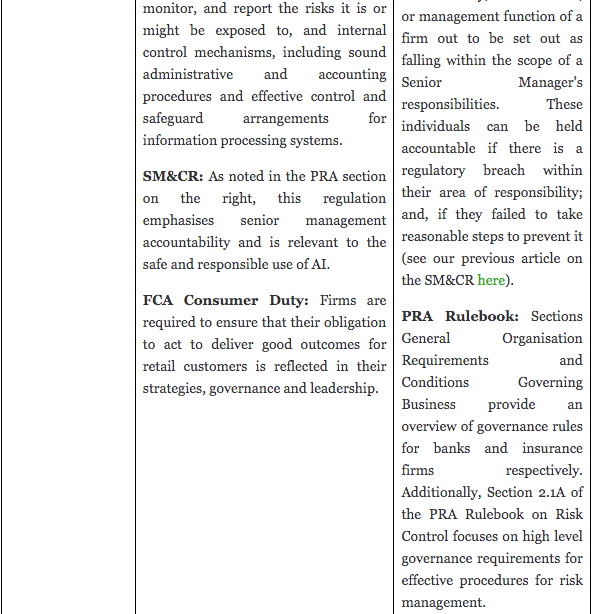

Both the FCA and BoE/PRA Updates indicate that the regulators welcome the government’s principles-based, technology-agnostic and sector-led approach to regulating AI. In particular, the regulators note that the cross-sectoral principles are consistent with their regulatory approach, and have mapped out the ways in which their respective regulatory frameworks could meet or address those principles (as summarised in the table below). They take the view that the existing FCA and PRA regulatory frameworks are appropriate to support AI innovation in ways that will benefit the industry and the wider economy whilst also addressing the risks, in line with the five principles set out in the White Paper.

The FCA has, however, noted that its regulatory approach will have to adapt to the speed, scale and complexity of AI and that greater focus on the testing, validation and understanding of AI models may be needed, as well as strong accountability principles.

The PRA has also noted that the continued adoption of AI in financial services could have potential financial stability implications and it will undertake deeper analysis on this during the course of 2024, the findings of which will be considered by the Financial Policy Committee (FPC) of the Bank of England.

The table below summarises the ways in which the FCA and PRA’s existing regulatory frameworks are said to address the UK government’s principles.

*Data protection laws are not covered in the table as the Information Commissioner’s Office (ICO) will remain responsible for enforcing compliance in this regard.

Investments to support innovation

The PRA and FCA each have, in addition to their primary objectives, a secondary objective to facilitate the international competitiveness of the UK economy and its growth in the medium to long term. Recognising this objective, the FCA and BoE/PRA Updates emphasise that the UK financial regulators are pro-innovation and refer to significant investments being made by the regulators to support the safe development of AI and to adopt AI technology to support their own functions. The FCA, for example, has established Regulatory and Digital Sandboxes to allow firms to test ideas in a controlled environment and is hosting a TechSprint in which trade surveillance specialists will have access to the FCA’s trading datasets to allow the development and testing of AI-powered surveillance solutions. The FCA has also announced the creation of a new FCA digital hub in Leeds comprising more than 75 data scientists.

The next 12 months

Over the next 12 months, the FCA intends to focus on the following in relation to AI:

- Continuing to further its understanding of AI deployment in UK financial markets;

- Building on its existing foundations to consider regulatory adaptations if needed;

- Collaborating closely with the Bank of England, Payment Systems Regulator (PSR) and other regulators as well as regulated firms, academia, society and international regulators and organisations to build empirical understanding and intelligence around AI;

- Testing for beneficial AI including through the pilot AI and Digital Hub, the FCA’s Digital Sandbox and the Regulatory Sandbox. The FCA will continue to explore the possibility of establishing an AI Sandbox; and

- Conducting research on deepfakes and simulated content following engagement with stakeholders as part of the Digital Regulation Cooperation Forum (DRCF) Horizon Scanning and Emerging Technologies workstream in 2024-25.

The PRA has been exploring the following potential areas for clarification on its regulatory framework:

- Data management: Firms have noted the fragmented nature of the current regulatory landscape around data management in the context of AI and the PRA is considering options to address those challenges;

- Model risk management (MRM): The model risk management principles for banks will come into effect on 17 May 2024;

- Governance: In DP5/22, the Bank of England and the PRA sought feedback on whether there should be a dedicated Senior Management Function (SMF) for AI under the Senior Managers and Certification Regime (SM&CR). Respondents expressed the view that existing firm governance structures are sufficient to address AI risks. The MRM principles are also relevant here: they require firms to identify the relevant SMF(s) to assume overall responsibility for the firm’s MRM framework and to provide a model inventory, which includes AI models; and

- Operational resilience and third-party risks: The Bank of England, PRA and FCA are currently assessing their approach to critical third parties (CTPs) in the financial sector following CP23/30 which was published in December 2023.

The PRA will also continue to engage directly with stakeholders. This may include establishing a new AI Consortium as a follow-up industry consortium to the Artificial Intelligence Public-Private Forum (AIPPF) which published its final report in February 2022.

Impact on businesses

Many businesses that operate in the financial sector – including financial services firms and technology providers – will welcome the principles-based approach favoured by the regulators and will be happy that they will not need to prepare for a prescriptive new suite of regulations in relation to IT systems that rely on AI components. Some businesses, on the other hand, are already preparing for implementation of the EU Artificial Intelligence Act and will be faced with decisions as to how their AI governance arrangements should apply in the context of their UK operations. Nevertheless, having some clarity from the regulators will be helpful at a time when businesses are looking to rapidly adopt new AI capabilities as they become available.

In our experience, and as can be seen from the above, there is no shortage of regulation and legislation that would apply to AI. However, the challenge for the financial services industry is that it is not always clear exactly how the regulators expect the regulation to apply to AI in practical operational terms. This is exacerbated when firms negotiate with vendors of AI systems, who may take a less conservative view of how a particular regulation should apply to the systems they provide. The industry would therefore welcome further guidance, and clear practical examples would be particularly helpful.

Strategic guidance from other UK regulators such as the Information Commissioner’s Office (ICO) and the Competition and Markets Authority (CMA) is expected and is also likely to have an impact on financial institutions. It is also a real possibility that a change in government following the upcoming general election at the end of this year could have a material impact on the direction of travel for AI regulation.