At a dinner with the American ambassador in 2007, Li Keqiang, future premier of China, said that when he wanted to know what was happening to the country’s economy, he looked at the numbers for electricity use, rail cargo and bank lending. There was no point using the official GDP statistics, Li said, because they are ‘man-made’. That remark, which we know about thanks to WikiLeaks, is fascinating for two reasons. First, because it shows a sly, subtle, worldly humour – a rare glimpse of the sort of thing Chinese Communist Party leaders say in private. Second, because it’s true. A whole strand in contemporary thinking about the production of knowledge is summed up there: data and statistics, all of them, are man-made.

They are also central to modern politics and governance, and the ways we talk about them. That in itself represents a shift. Discussions that were once about values and beliefs – about what a society wants to see when it looks at itself in the mirror – have increasingly turned to arguments about numbers, data, statistics. It is a quirk of history that the politician who introduced this style of debate wasn’t Harold Wilson, the only prime minister to have had extensive training in statistics, but Margaret Thatcher, who thought in terms of values but argued in terms of numbers. Even debates that are ultimately about national identity, such as the referendums about Scottish independence and EU membership, now turn on numbers.

Given the ubiquity of this style of argument, we are nowhere near as attentive to its misuses as we should be. As the House of Commons Treasury Committee said dryly in a 2016 report on the economic debate about EU membership, ‘many of these claims sound factual because they use numbers.’ The best short book about the use and misuse of statistics is Darrell Huff’s How to Lie with Statistics, first published in 1954, a devil’s-advocate guide to the multiple ways in which numbers are misused in advertising, commerce and politics. (Single best tip: ‘up to’ is always a fib. It means somebody did a range of tests and has artfully chosen the most flattering number.) For all its virtues, though, even Huff’s book doesn’t encompass the full range of possibilities for statistical deception. In politics, the numbers in question aren’t just man-made but are often contentious, tendentious or outright fake.

Two fake numbers have been decisively influential in British politics over the baleful last thirteen years. The first was an outright lie: Vote Leave’s assertion that £350 million a week extra ‘for the NHS’ would be available if we left the EU. The real number for the UK’s net contribution to the EU was £110 million a week, but that didn’t matter, since the crucial thing for the Leave campaign was to make the number the focus of debate. The Treasury Committee said the number was fake, and so did the UK Statistics Authority. This had no effect, or perhaps even a negative one. In politics it doesn’t really matter what the numbers are, so much as whose they are. If people are arguing about your numbers, you’re winning.

The other incorrect statistic to have done damage in Britain in the last decade wasn’t so much a lie as a mistake. As Georgina Sturge points out in the bracing Bad Data, when the chancellor, George Osborne, was making the case during the 2010s for ‘austerity’ – itself a misleading quasi-moral term for reductions in government spending that disproportionately affect the poor – he relied heavily on recent findings in economics. The Harvard economists Carmen Reinhart and Kenneth Rogoff had ‘shown’, via a model built using 44 countries over two centuries, that when a government’s ratio of debt to GDP went over 90 per cent, the economy shrank. Osborne drew directly on this research. ‘The latest research suggests,’ he said, ‘that once debt reaches more than about 90 per cent of GDP the risks of a large negative impact on long-term growth become highly significant.’

This was disingenuous, as I wrote at the time: many Tories go into politics specifically to shrink the state. For them, the aftermath of the credit crunch was an inflection point, an ideal opportunity to exploit a political moment and change direction in government spending. It was left to Thomas Herndon, a graduate student at the University of Massachusetts Amherst, to check the relevant numbers, as a homework assignment. He tried to replicate the findings in the Reinhart and Rogoff paper but couldn’t, so he asked them for their original data, which they (admirably) sent. Herndon checked the numbers and found the kind of mistake for which in other contexts people lose their jobs: a spreadsheet formula that was meant to average twenty rows of data took in only fifteen. Australia, Austria, Belgium, Canada and Denmark had been left out. When they were included, countries with debt to GDP over 90 per cent were no longer shrinking. ‘By that point the 90 per cent threshold was a cornerstone of economic policy,’ Sturge writes. ‘Cuts had already been planned and the idea of necessary “austerity” was something the UK government simply couldn’t back down on.’
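The mechanics of that kind of slip are easy to reproduce. The sketch below uses invented growth figures, not Reinhart and Rogoff’s data, for twenty hypothetical high-debt country-periods, and shows how a spreadsheet-style range that stops at row fifteen instead of row twenty can flip the sign of an average. The function name and every number in it are illustrative assumptions.

```python
from statistics import mean

# HYPOTHETICAL annual GDP growth rates (per cent) for twenty high-debt
# country-periods. Invented for illustration; not the real dataset.
growth = [
    -1.0, 0.5, -0.8, 0.2, -0.4, 0.1, -0.6, 0.3, -0.5, 0.0,
    -0.2, 0.4, -0.7, 0.1, -0.4,   # rows 1-15
    2.5, 3.0, 2.0, 2.5, 3.0,      # rows 16-20: the rows a short range drops
]


def average_rows(values: list[float], first: int, last: int) -> float:
    """Average rows first..last inclusive, 1-indexed like a spreadsheet range."""
    if not 1 <= first <= last <= len(values):
        raise ValueError("range falls outside the table")
    return mean(values[first - 1:last])


truncated = average_rows(growth, 1, 15)  # the range actually typed
full = average_rows(growth, 1, 20)       # the range intended

print(f"first 15 rows: {truncated:+.2f}%")
print(f"all 20 rows:   {full:+.2f}%")
```

The range check only catches a range that runs off the edge of the table; it cannot tell that a perfectly valid range stops short. That is why replication, asking for the data and recomputing the result as Herndon did, is what actually catches this sort of error.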

It is unfair to an entire discipline, though, to pick on spectacular failures and misuses as if they are characteristic. At their heart, statistics exist to do what their etymology suggests: help states to understand themselves. Most immediately, the word comes from the German Statistik, of or pertaining to the state or government. Go back far enough, and it comes from the Proto-Indo-European root ‘sta-’. That has a huge range of meanings linked to the idea of being set down, being firm, standing: it is present everywhere from ‘cost’ to ‘understand’, via ‘destitute’, ‘epistemology’, ‘metastasis’ and ‘system’. All of those cognates are relevant in Arunabh Ghosh’s dry but suggestive book Making It Count, which is about, as the subtitle has it, ‘Statistics and Statecraft in the Early People’s Republic of China’.

In 1949, when the Communist Party found itself in charge of China after winning the civil war, it had one of the most basic problems imaginable: it didn’t know how many people it had in its charge or where they lived. The collection of modern statistics, which had begun with the British-run Chinese Maritime Customs Service, failed under the pressure of ‘the collapse of the Qing empire in 1911 … warlordism, a Japanese invasion, a world war and a debilitating civil war’. It wasn’t possible to conduct a census. The project of bringing about a socialist state in China ‘hinged, to a large degree, on being able to resolve this crisis of counting’. And, as Lenin wrote, ‘socialism is first of all a matter of accounting.’

Ghosh identifies three main strands in his story, and they are all relevant to the use of data and statistics in other contexts. The first is the anthropological, investigative, ethnographical approach which in the first instance, perhaps surprisingly, was the one practised by Mao himself. His 1927 ‘Report on an Investigation of the Peasant Movement in Hunan’ was based on direct inquiry into conditions in his home province. ‘I met all sorts of people and picked up a good deal of gossip.’ His text made the case for the revolutionary potential of the peasantry, a big and important change in direction for Chinese Marxism, but it also showed a path which government and professional statistics have, by and large, tended to ignore. Qualitative, direct and personal investigation is out of fashion; it has, indeed, become in a sense the opposite of the way governments do statistics. Corporations are starting to do things differently, and some employ anthropologists to increase their understanding of the way customers act and think. Intel, Microsoft and Walt Disney – to cite three examples of companies that have in-house anthropologists – use insights of this sort, but Western politicians never do.

The second strand of statistical practice picked out by Ghosh is the simplest. It involves counting everything. He calls this the exhaustive method, and it is most clearly seen in the practice of the census. The principle of the census is easy to understand, and its utility is easy to understand too – in fact, once you think about it, it’s difficult to imagine how a country can govern itself without knowing the basic data concerning how many people it contains, how old they are and where they live. (Reality often gets in the way. Especially civil wars. The winner in the no-census stakes is Lebanon, which hasn’t had one since 1932.) The actual business of performing a census is not straightforward: the first release of data from the last UK census, held on 21 March 2021, took fifteen months; the full release isn’t yet complete, and the total cost of the exercise is around £1 billion. Still, although the reality is complicated and expensive, the idea is not: when in doubt, count everything.

This is not an ideologically neutral process. Dan Bouk’s history of the 1940 US census, Democracy’s Data, makes that point very thoroughly. The census is a bedrock of democracy in the US; it is mandated in the first article of the US constitution. A census is supposed to be the purest form of enumeration, an exercise in counting and nothing else. It is the bare brick of numbers – at least, that’s the idea. This means that any markings or patterns in the brick stand out. If you’re trying to stick to pure facts, one of the things you’re revealing is what you consider to be a fact. To quote that Treasury Committee report again, ‘a recurring complaint in the debate on the European Union is the absence of “facts”.’ You have to love those inverted commas.

In the case of the US census, what stands out most sharply as a ‘fact’ is race. During slavery, the enslaved were counted in the census as three-fifths of a person, for the purpose of allocating seats in Congress, but weren’t named, other than as property of a named owner. The census treated free African Americans in the same way as everyone else, with the ‘head of household’ the only person named until the 1850 census, which was the first to name every individual citizen – though the enslaved were still only enumerated. The dry records of name and number give the faintest hints of the dramatic individual changes in circumstance they record. Frederick Douglass appears in the 1830 census as a number: he is probably the ‘1’ indicating a male slave aged between ten and 24 living in the Baltimore household headed by Hugh Auld. (He wouldn’t have called himself Douglass then: his mother gave him the surname Bailey; he took the surname Stanley when he escaped from Baltimore; he became Johnson in New York and married under that name; he changed it to Douglass only later.) In the 1840 census Frederick Douglass is listed as a head of household in New Bedford, Massachusetts. His wife and daughter appear as numbers.

The charged status of race in the census did not end there. Contemporary arguments about racial ‘degeneration’ and the changing composition of the US population fed directly into the census of 1920, in which almost half the form was devoted to questions about where people were born and what their parents’ mother tongue was. ‘In the ensuing decade, nativist voices in Congress used that data to draw attention to the growing population of foreign-born Americans who hailed from “undesirable races” – especially Italians, Eastern Europeans and Russian Jews – and to frame that population as a problem.’ Racial degeneration had long been a theme in the census: a tabulation error in the 1840 census had led to the ‘insane and idiots’ being recorded in columns used to count the number of free Black citizens. Pro-slavery advocates leaped on this as evidence that Black people were constitutionally incapable of coping with freedom. ‘A missing word within an overly elaborate form appears to have been at the root of the misleading evidence pointing to an epidemic of idiocy and insanity in free Black communities, an epidemic that never existed,’ Bouk writes. Racial exploitation of distorted census data was a theme for decades. In 1896, Frederick Hoffman published a 330-page pseudo-scientific study, Race Traits and Tendencies of the American Negro, with a view to proving a racial tendency to degeneracy and criminality. It appeared under the imprint of the American Economic Association. Hoffman relied on census data that had been systematically undercounting Black Americans, and used it to predict the ‘eventual extinction of the Black race in the United States’.

As Benedict Anderson once wrote, ‘the fiction of the census is that everyone is in it, and that everyone has one – and one only – extremely clear place.’ Even the purest form of exhaustive data contains biases, distortions and lacunae: it is, in a sense, a work of fiction. That doesn’t render the exhaustive method invalid, but it does mean that it always needs to be questioned and contextualised. There is room for another approach, one that makes use of analytical, selective techniques (Ghosh calls this the stochastic approach). Such techniques need to bear in mind what T.S. Eliot said about the best method of literary criticism: ‘There is no method but to be very intelligent.’ There’s what you can count, and what sense you can make of what you count, and the lessons to be learned from that are not always what they seem. In a previous life, I used to write an arts column for the Daily Telegraph. In that capacity I once had lunch with the editor, Charles Moore, who said something I’ve never forgotten. He was talking about market research, and when I said something sensitive and thoughtful about it being mostly bollocks, he replied: ‘No, it can be very helpful, but the thing you have to remember is that it is Delphic. It answers the question you ask, but it doesn’t tell you what to do.’

Call it the Jaap Stam conundrum. The story is told in Rory Smith’s entertaining book about the use of data in football, Expected Goals. Stam was a very talented but ageing central defender who had for three years been crucial to the success of Alex Ferguson’s Manchester United. Stam got into Ferguson’s bad books, and Ferguson decided to get rid of him, backed by data showing that Stam was now making fewer tackles. Stam was sold to Lazio for £16.5 million. Simon Kuper, co-author of Soccernomics (2009), about the use of numbers in football, wrote that this might have been the first deal in football based partly on data. It was, as Ferguson later admitted, a ‘bad decision’. Stam went on to have several productive years at the top of Italian football. The mistake arose because the data were Delphic. The question Man U wanted to have answered was ‘Should we keep Stam?’ Instead, the oracle answered the question ‘How many tackles is Stam making?’ But, as Smith writes,

the best defenders do not need to make many tackles. The tackle itself is an act of last resort; great defenders intervene long before anything so tawdry is required. That Stam was making fewer tackles was not a sign that he was getting worse; if anything, it may have been a sign that his anticipation and his positioning and his reading of the game were all improving.

Football, like Mao’s China, has discovered that getting hold of the data is one thing and interpreting it is another. The terms ‘data’ and ‘analytics’ are used interchangeably in sport, Smith points out, but in fact the gathering of data, fuelled by surging interest from sports teams and professional gamblers, has tended significantly to outpace the intelligent analytic use of that data. Perhaps that isn’t surprising, given the sheer amount of data generated by modern football: a peak of 575 data points a second, three million data points from a single match. The data is collated by people in places where labour of that type is inexpensive, such as the Philippines and Laos. Most of the work is the repetitive task of tagging each and every moment of action and movement in a game. Smith describes the work of Fran Taylor, who ran a data operation for a company called StatDNA. Taylor managed the operation by day and played in the Lao Premier League in the evenings. The company’s business advantage was to use cheap labour to tag games in unusually thorough detail: ‘the result would be data that was cleaner than anything else on the market.’ The upshot: StatDNA was bought by Arsenal.

As for what teams do with the data, well, the short answer is that we don’t really know. Teams spend a lot of money on analytics, and they don’t do so in order to give away the competitive advantages of their approach. You can see the effects, though. Two relative newcomers to the Premier League, Brentford and Brighton, are both owned by professional gamblers who made their fortunes betting on football. Tony Bloom of Starlizard (b. 1970, Lancing, maths at Manchester, professional poker) and Matthew Benham of Smartodds (b. 1968, Slough Grammar, physics at Oxford, derivatives trading) are former colleagues at Premier Bet who haven’t spoken since 2004. The way they use the data they collect is a secret. But you sometimes get a glimpse of how effective the data can be, in financial as well as football terms. Brighton bought the Ecuadorian midfielder Moisés Caicedo from Independiente del Valle in Ecuador for £4.5 million in 2021, and have just sold him to Chelsea for the all-time UK record fee of £115 million. What that tells us is that two years before it became obvious what Caicedo could do, Brighton saw something in his numbers which told them how good he could be. Whatever it was, they ain’t telling. Brighton have learned the lessons of Delphi and Jaap Stam.

Georgina Sturge is a statistician at the House of Commons Library: her job is to provide politicians with answers to their statistical questions. ‘What we see happening in football analytics is just like what’s happening in public policy,’ she argues. If that sounds reassuring, it shouldn’t. Bad Data is the story of how often things go wrong in the political use of statistics. The potential for government to gather colossal, football-style quantities of data is higher than it has ever been, but for good governance to come out of that, the state has to do a better job of understanding itself. There seems to be a special gremlin in the UK which ensures that the more salient a political issue is at a given moment, the lower the quality of the relevant (or, all too often, irrelevant) statistics.

During the 1980s, for example, the most important political issue in the UK was unemployment. As a result, the government repeatedly changed both the definition of unemployment and the technique for registering and counting the out-of-work. These categories are not fixed: until 1971 the only record of unemployment was the claimant count plus those claiming insurance from their union; the definition changed to anyone ‘actively seeking work’ in order to align with International Labour Organisation norms. Currently, there are broader measures that try to give a figure for labour market participation, a number that tracks not only people looking for work but the wider pool of people who, for whatever reason, aren’t. In addition to the shifts in what was being measured, Thatcher’s Conservative government changed the way unemployment was measured more than thirty times. Men over sixty who were claiming unemployment benefit were told they could retire (removing 162,000 men from the figures). Local offices were told to use claimant counts rather than registrations (removing 190,000 from the figures). Rules around National Insurance were changed (removing 38,000 from the figures). And so on. The Office for National Statistics, Sturge says, ‘counted nine “significant” changes which meant the series couldn’t be compared over time’.

Crime is another area where figures are prone to funkiness. The political salience of crime varies: when it is high on people’s minds, pressure grows for governments to ‘do something about it’, which usually involves setting targets, and then the targets become the subject of manipulation, in accordance with Goodhart’s law: ‘When a measure becomes a target, it ceases to be a good measure.’ When it comes to crime, the main tweaking concerns the police figures for recorded crime in England and Wales, a public dataset that has been running since 1857. ‘The trouble with recorded crime is that, to state the obvious, crimes do not record themselves,’ Sturge writes. There is huge scope for police forces and individual officers to adjust the numbers by deciding whether a crime has happened or not, and/or what kind of crime it is. In response to Labour targets in the 1990s, one force recorded a 27 per cent reduction in the category of crime that had a target (‘theft from a motor vehicle’) alongside a 407 per cent increase in a closely related category that had no target (‘vehicle interference’). Apparently this kind of thing is known as ‘cuffing’, ‘after the magician’s act of making a rabbit disappear into the cuff of their sleeve’.

The Crime Survey for England and Wales is a different beast from police recorded crime: it is a survey in which a large random sample of the population is asked about their experience of crime over the last year. (In terms of categories, police recorded crime is, or is supposed to be, exhaustive; the survey is analytic, using a sampled population to get closer to the truth of the whole.) It is much less prone to wild oscillations than the police numbers, and it shows that, over time, crime is decreasing. Decreasing pretty dramatically, in fact, from a 1995 high of 19.8 million crimes to a 2022 low of 4.7 million – a fall of more than three-quarters. Once you adjust for the 8.5 million increase in the population of England and Wales, the fall is even bigger: an 80 per cent drop. Unfortunately, nobody seems to believe that. Perhaps we’ve got so used to the crime data being massaged that we no longer trust the numbers. Or maybe something about the emotive nature of crime makes it difficult to feel it’s becoming less of a problem. On the evidence, though, we’re doing a brilliant job of making a success feel like a failure.
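The percentages in that paragraph can be checked in a few lines. The survey totals and the 8.5 million population increase are the figures quoted above; the 1995 population of England and Wales (taken here as roughly 51 million) is an assumption for illustration, and the conclusion is not sensitive to its exact value.

```python
def percentage_fall(before: float, after: float) -> float:
    """Fractional fall from `before` to `after` (0.25 means a 25 per cent fall)."""
    return 1 - after / before


# Crime Survey totals quoted in the text, in millions of crimes.
crimes_1995, crimes_2022 = 19.8, 4.7

# ASSUMPTION: England and Wales population in 1995, in millions (approximate).
population_1995 = 51.0
population_2022 = population_1995 + 8.5  # increase quoted in the text

raw_fall = percentage_fall(crimes_1995, crimes_2022)
per_head_fall = percentage_fall(
    crimes_1995 / population_1995,  # crimes per person, 1995
    crimes_2022 / population_2022,  # crimes per person, 2022
)

print(f"fall in total crimes:      {raw_fall:.1%}")
print(f"fall in crimes per person: {per_head_fall:.1%}")
```

The raw fall comes out a little over three-quarters and the per-person fall just under 80 per cent. Trying other plausible values for the 1995 population moves the per-person figure by no more than about a percentage point.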

A central pillar of Sturge’s narrative concerns the UK state’s biggest statistical failure of recent decades: the failure to keep track of immigration. The accession of new countries to the EU in 2004 led to a surge in immigration which in turn led to that becoming the number one hot topic in British politics, leading ultimately to the 2016 Brexit vote. So the state must have been paying keen attention to immigration, right? Wrong. During all the years when this was the single most heated topic in the UK, the government didn’t have a clue how many immigrants there actually were. Its estimates – that is a euphemism for wild guesses – were based on a spectacularly useless thing called the International Passenger Survey. The IPS involved approaching people at airports and asking them who they were, where they were from, what the purpose of their trip to the UK was, and how long they were planning to stay. The survey was introduced in 1961 as a way of finding out about tourism, and accidentally turned into the state’s only measure of immigration for the next six decades. Some fun features of the survey include: until 2009 it only operated at Heathrow, Gatwick and Manchester, so missed millions of arrivals at regional airports, especially arrivals from the EU; it stopped counting at 10 p.m., so missed millions of departures, especially to Africa and Asia; surveyors were forbidden to approach unaccompanied travellers who looked under eighteen, so missed millions of departures of non-Caucasian students in particular (people find it harder to judge the age of someone from an ethnic background other than their own).

The concatenation of all these factors meant that the survey was useless – worse than useless, because it had a decisive influence on government policy. At the time of Brexit, the government estimate for the number of EU citizens in the country was around three million. When a process was brought in to formalise their presence in the UK, 7.4 million applications for settled status were received. The single most important number in the Brexit debate – and nobody knew what it was. Erring in the other direction was the figure for migrants who had overstayed their visas and illegally remained in the UK. The government used ONS estimates that around 100,000 students were overstaying. Theresa May relied heavily on these numbers in compiling her policy to make it harder for foreign students to come here, as part of her vile ‘hostile environment’. When the real number was finally put together from exit checks, in 2017, it turned out to be 4600. A significant government policy was based on a big fat mistake – which the government would have realised if it had listened to the higher education sector. That is an example of a moment when it would have been much more effective to use an anthropological, inquiry-based approach, rather than simply relying on fake data.

This might bring us to the conclusion that the UK is failing at statistics. But it is not true to say that the British state is uniquely bad at data. In fact, and despite the cock-ups outlined above, this is something of a national strength: as Sturge writes, ‘by global standards we do have very good data.’ Our governments fudge and make mistakes, but they still compare favourably with states such as China, where a majority of deaths go unrecorded, where Covid was systematically lied about, and where, when a number becomes politically difficult, it simply disappears from the published data (which is what happened to China’s highly sensitive youth unemployment figures in August). France has notorious difficulties with its ethnic minorities, compounded by the fact that it collects no data on ethnicity; indeed, gathering any such data is illegal. This is supposed to be an anti-racist policy, but instead it turns race into a zone of official silence, a deliberate government blind spot. Ask in the banlieues how that’s working out. The Covid response in the UK failed in some respects and was patchy in others, but we had some of the best data in the world, thanks to the Covid Infection Survey, which used random sampling to analyse prevalence of the disease. This is different from counting how many people are ill, or how many turn up for medical treatment: it was a much more thorough and much more accurate measure of the illness moment to moment – 500,000 people took part in the survey, and 11 million tests were administered.
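The difference between counting cases and sampling for prevalence can be sketched as a simple estimate from a random sample. The sketch below assumes a simple random sample and uses a normal-approximation confidence interval; the real Covid Infection Survey used considerably more elaborate statistical modelling, and the test counts here are invented.

```python
import math


def prevalence_estimate(positives: int, tested: int, z: float = 1.96) -> tuple[float, float, float]:
    """Point estimate and approximate 95% confidence interval for prevalence,
    assuming a simple random sample (normal approximation to the binomial)."""
    if tested <= 0 or not 0 <= positives <= tested:
        raise ValueError("need tested > 0 and 0 <= positives <= tested")
    p = positives / tested
    margin = z * math.sqrt(p * (1 - p) / tested)
    # Clip the interval to the meaningful range [0, 1].
    return p, max(0.0, p - margin), min(1.0, p + margin)


# HYPOTHETICAL round of testing: 1,000 positive swabs from 50,000 randomly chosen people.
p, low, high = prevalence_estimate(1_000, 50_000)
print(f"estimated prevalence {p:.2%} (95% CI {low:.2%} to {high:.2%})")
```

Because the people tested are chosen at random rather than selected by their own decision to seek treatment, the sample includes those without symptoms, so the estimate speaks for the whole population rather than only for those who present.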

This leaves open the question of what the state does with all the things it counts. A big part of the answer is that it uses them to raise taxes, and to decide how those taxes are spent. Paul Johnson’s sharp and thorough Follow the Money is based on an idea so clear that it’s surprising nobody has thought of it before: he provides a full accounting of where the UK state gets its funding, and what it does with the money. That might sound dry, but Johnson, the head of the Institute for Fiscal Studies, has written an energetic and angry book, charged with a strong sense of frustration. That’s interesting in itself, because the IFS for many years had the reputation of being anti-left doomsayers, forever explaining that we the voters can’t have nice things because the state can’t afford them. The fact that the wrath of the balanced-budget technocrats is so firmly directed at Conservative misgovernment is, in historical terms, a shift.

The big picture is as follows. The UK raises £910 billion in taxes (the number is from Follow the Money, which uses OBR data for 2022-23). The main sources of this are income tax of £248 billion, national insurance of £175 billion, VAT of £157 billion; then corporation tax, council tax, business rates, and then down the list of smaller but still significant sums from taxes on inheritance, booze, fuel and property transactions. It would be unfair to call the system chaotic, but it is far too complicated. Labour governments come off pretty lightly in Johnson’s book, which is fair enough since they haven’t been in power since 2010, but the one Labour politician who does get some stick is Gordon Brown, mainly for the over-complexities he introduced through his compulsive tinkering with tax and benefits. As it stands, the UK tax system doesn’t make much sense, even when it comes to what should be the central and most obvious pillar of the whole thing, income tax.

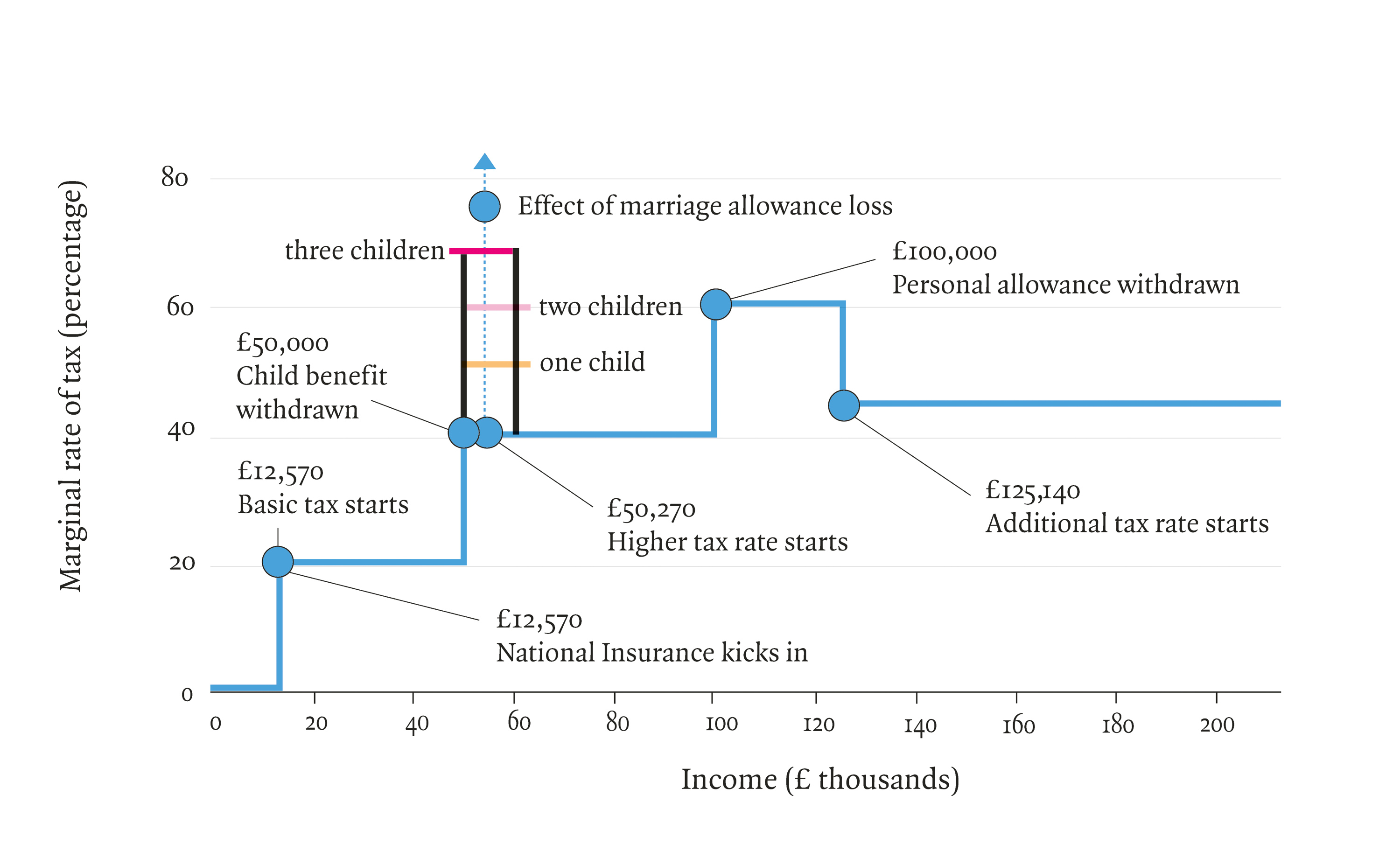

Consider the simple question: what’s the highest rate of income tax? Most people with enough interest (or enough money) to follow the subject will say 45 per cent, and some of them will know the threshold at which that rate kicks in: £125,140. But that’s not right. The highest rate of tax paid in the UK is 68 per cent, and it’s paid by single earners with three or more children, on income between £50,000 and £60,000. The next highest rate is 62 per cent, which is paid on income between £100,000 and £125,140. Earn more than that, and your tax rate drops: a banker on £500,000 is paying a lower marginal rate of tax than a junior colleague on £100,000. In the case of both the 68 per cent band and the 62 per cent band, the factor that causes the rate to rocket is the withdrawal of benefits and allowances as income rises. Something similar affects the poor, whose benefits go off a cliff edge as they earn more money from work. People coming off income support lose 70p in every pound they earn. The upshot is an income tax system that looks like the graph above.

It’s possible that some people in those two highest tax bands are also paying the graduate tax, 9 per cent (student loan repayments aren’t called taxes, but that’s what they are). That would make the highest marginal rate of tax in the UK 77 per cent. It’s not as if there was ever a public conversation about this, or really any thought at all, along the lines of ‘the people we really want to squeeze are parents with three kids on £55,000 a year – let’s get ’em!’ It’s just collateral damage from an over-complicated system.
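The 68 and 62 per cent figures can be reconstructed from the way benefits and allowances are withdrawn as income rises. The sketch below rests on assumed 2022-23 parameters, not figures taken from Johnson’s book: a 40 per cent higher rate and 45 per cent additional rate; 2 per cent employee National Insurance above the upper earnings limit (ignoring the temporary Health and Social Care Levy); child benefit of £21.80 a week for the eldest child and £14.45 for each other child, clawed back by the High Income Child Benefit Charge between £50,000 and £60,000; and a £12,570 personal allowance withdrawn at £1 for every £2 earned above £100,000. It ignores Scottish rates, pension contributions and the basic-rate band.

```python
# ASSUMED 2022-23 UK parameters (illustrative; verify against HMRC guidance).
HIGHER_RATE = 0.40
ADDITIONAL_RATE = 0.45
NI_ABOVE_UEL = 0.02              # employee NI above the upper earnings limit
HIGHER_RATE_THRESHOLD = 50_270
CB_ELDEST_WEEKLY = 21.80         # child benefit, first child
CB_OTHER_WEEKLY = 14.45          # child benefit, each further child
HICBC_START, HICBC_END = 50_000, 60_000
PERSONAL_ALLOWANCE = 12_570
PA_TAPER_START = 100_000
ADDITIONAL_RATE_THRESHOLD = PA_TAPER_START + 2 * PERSONAL_ALLOWANCE  # £125,140
STUDENT_LOAN_RATE = 0.09         # repayment rate, assumed to apply at these incomes


def annual_child_benefit(children: int) -> float:
    if children <= 0:
        return 0.0
    return 52 * (CB_ELDEST_WEEKLY + CB_OTHER_WEEKLY * (children - 1))


def marginal_rate(income: float, children: int = 0, student_loan: bool = False) -> float:
    """Share of the next pound earned that a single employee loses.

    Only covers income from the higher-rate threshold upwards.
    """
    if income < HIGHER_RATE_THRESHOLD:
        raise ValueError("sketch only covers the higher and additional rate bands")
    if income >= ADDITIONAL_RATE_THRESHOLD:
        rate = ADDITIONAL_RATE + NI_ABOVE_UEL
    else:
        rate = HIGHER_RATE + NI_ABOVE_UEL
        if income < HICBC_END:
            # The charge claws back all child benefit across a £10,000 band
            # (in £100 steps in practice; averaged per pound here).
            rate += annual_child_benefit(children) / (HICBC_END - HICBC_START)
        if income >= PA_TAPER_START:
            # Each extra £2 earned removes £1 of allowance, taxed at 40%.
            rate += HIGHER_RATE / 2
    if student_loan:
        rate += STUDENT_LOAN_RATE
    return rate


for label, income, kids in [("£55,000, three children", 55_000, 3),
                            ("£110,000, no children", 110_000, 0),
                            ("£500,000, no children", 500_000, 0)]:
    print(f"{label}: {marginal_rate(income, kids):.0%}")
```

Under these assumptions the three cases come out at roughly 68, 62 and 47 per cent (the last being the 45 per cent rate plus National Insurance), so the £500,000 earner does face a lower marginal rate than the £110,000 one. Passing `student_loan=True` for the £55,000 parent adds the 9 per cent repayment and gives about 77 per cent.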

Dithering is a big part of the chaos too, and not just when it comes to income tax. ‘We have also suffered from the most absurd lack of consistency,’ Johnson writes.

With the same party in power since 2010 we have had corporation tax slashed from 26 per cent to 19 per cent followed by an announcement that it would return to 25 per cent, then that it wouldn’t, and then that it would … Even more than in any area of government spending there is no sense of strategy or direction … It has literally never published a strategy for tax.

VAT too. If you want a pet, get a rabbit – being edible, they escape the 20 per cent VAT charged on other pets. If you want some chocolate, buy cooking chocolate – again, no VAT. Let’s transition to green energy – but let’s charge VAT on household gas and coal at 5 per cent, not 20 per cent, because it would be unpopular if fuel VAT were higher, even though government policy is a legally binding target of net zero by 2050.

The picture Johnson paints of government spending is also messy. In 2022-23, the state spent £1.18 trillion, a cool £218 billion more than it raised in tax. That shortfall is the annual deficit, which is added to the national debt, now standing at £2.5 trillion. That’s right: thirteen years after Osborne’s speech about the vital importance of holding debt-to-GDP below 90 per cent, the ratio is 100.6 per cent. The main targets of spending are health and social care at £180 billion (£153 billion of that for NHS England), pension age benefits at £135 billion, working age benefits and tax credits at £108 billion (£60 billion of which goes to households with at least one person in work), £150 billion on local government (including in the devolved nations), and £53.5 billion on schools.

Johnson draws several conclusions from this panoramic view. One, there is a ‘huge and ongoing bias in public policy towards protecting the older generations at the expense of the young’. Among other things, the pensions ‘triple lock’ – linking the state pension to whichever is the highest of inflation, average wage growth or 2.5 per cent – is unaffordable and indefensible. If inflation is 10 per cent one year, and wages rise by 10 per cent the next year in an attempt to catch up, pensions will have gone up 21 per cent over the two years, because the second rise is applied on top of the first. There is no plan that explains how we can continue to pay for this. Many people retire and are immediately richer than they ever were in full-time work. How can the state possibly afford that? Answer: it can’t – but buying pensioner votes in the short term is all that matters. State pensions are funded from current taxes. This means that young people are paying for levels of pension provision they will never be able to enjoy.
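The ratchet in the triple lock can be sketched in a few lines of Python. This is a minimal illustration, not a model of how the state pension is actually uprated: the function names are hypothetical, and the year-one wage growth (5 per cent) and year-two inflation (3 per cent) are invented figures, added only to show that pensions end up outpacing both prices and wages.

```python
def triple_lock_rise(inflation: float, wage_growth: float, floor: float = 0.025) -> float:
    """One year's pension increase: the highest of inflation, wage growth or the floor."""
    return max(inflation, wage_growth, floor)


def compound(rates) -> float:
    """Cumulative change, as a fraction, from a sequence of yearly rises."""
    factor = 1.0
    for rate in rates:
        factor *= 1 + rate
    return factor - 1


# Hypothetical scenario as (inflation, wage_growth) pairs, one per year:
# year one, prices jump 10% while wages rise only 5% (assumed figure);
# year two, wages catch up with 10% while inflation falls to 3% (assumed figure).
scenario = [(0.10, 0.05), (0.03, 0.10)]

pension_rise = compound(triple_lock_rise(i, w) for i, w in scenario)
price_rise = compound(i for i, _ in scenario)
wage_rise = compound(w for _, w in scenario)

print(f"pensions {pension_rise:.1%}, prices {price_rise:.1%}, wages {wage_rise:.1%}")
# prints "pensions 21.0%, prices 13.3%, wages 15.5%"
```

Because the lock always takes the larger of the two measures each year, pensions collect the inflation spike in one year and the wage catch-up in the next, so over the period they rise faster than either prices or earnings.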

Two, the Beveridgian vision for public services has failed. It was axiomatic to Beveridge that work was a route out of poverty: if you were in work, you weren’t poor, by definition. But the majority of the poor are now in work, which means that in effect the state is subsidising low pay. In addition, Beveridge thought that bringing in a national health system would result in healthcare costs going down, because people’s health would improve and therefore demand would decrease. Let’s just say it didn’t work out like that. Johnson is no worshipper at the shrine of the NHS, and points out that for all the complaints about underfunding, it is actually roughly average for rich-country health spending, at about 10 per cent of national income. Outcomes, however, are below average across the board, especially for colon, breast, lung and prostate cancer, strokes and heart attacks. ‘On international comparisons the NHS is nothing special. It is particularly bad at keeping us alive.’ He doesn’t make allowance for the fact that the NHS is increasingly having to make up for gaps in social care, and is catching a growing number of people who have fallen sick through failures elsewhere in the state.

This is the point at which the reader might be expecting a peroration, along the lines of: something something statistics, something something government, and then finally, Something That Must Be Done. But the story of data in UK governance doesn’t bring you to that place. Things go wrong here, as Sturge makes clear. But in general, we do pretty well with what we count, and have a fairly clear sense of how we raise and spend money. The big failure in the UK comes when it is clear what needs to be done, but politicians lack the courage to do it, because they are frightened of the electorate. Those voters in turn are reluctant to go along with any policy that imposes costs on them, however much those policies would be for the greater good. Council tax, which raises £42 billion, is based on property valuations last carried out in 1991. House values have changed unrecognisably in this period, with the result that people in richer parts of the country are paying tiny fractions of what an appropriate tax should be. A rate-payer in Kensington and Chelsea pays on average 0.1 per cent of the value of their home each year, one in Hartlepool on average 1.3 per cent – 13 times more. The planning system is heavily rigged against infrastructure and development, and as a result is a torturously slow, expensive, Nimby-prioritising obstacle to growth and, in particular, to equity between the generations. The cartoon version would be that rich greedy older people who own their own homes obsessively block developments that would favour the young – and it is, unfortunately, pretty accurate.

The state is repeatedly inconvenienced by its own planning system. A spectacular piece in the Daily Telegraph in June gave a classic example. Current projections and sentencing guidelines suggest the prison population will hit between 93,000 and 106,000 by 2027. There is nowhere to put the extra prisoners, because prisons are already full, with a current population of 85,851 and capacity for only 800 more. The obvious solution is to build more prisons. But three big prisons supposed to be under construction are blocked. The reason? Badgers. Just to be clear, I’m not in favour of the government’s criminal justice and prisons policy. But whatever the reasons for stopping a democratically elected government from implementing its criminal justice policy, badger habitats should not be among them.
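The size of the gap implied by the Telegraph’s figures is simple subtraction. As a rough sketch, assuming the 800 spare places are exact and that no other capacity is added or lost before 2027 (both assumptions, not claims from the piece), the shortfall comes out at between roughly 6,300 and 19,300 places:

```python
current_population = 85_851
spare_places = 800  # "capacity for only 800 more", taken as exact (assumption)
capacity = current_population + spare_places  # total places available

# Projected 2027 prison population range quoted in the text
projected_low, projected_high = 93_000, 106_000

# Assumes capacity stays fixed until 2027 (assumption)
shortfall_low = projected_low - capacity
shortfall_high = projected_high - capacity

print(f"Shortfall by 2027: {shortfall_low:,} to {shortfall_high:,} places")
# prints "Shortfall by 2027: 6,349 to 19,349 places"
```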

One way of explaining how Britain got to this place is to say that we waited all this time for the worst prime minister in history, and then four came along at once. The irony is that the maddest and least competent of the Failed Four, Liz Truss, was right in her central point. Britain does desperately need economic growth. As Johnson, like others before him, points out, we are stuck with an electorate that wants European levels of public services combined with American levels of taxation. We will never reconcile that tension, and it will continue to be as central to our politics in the decades ahead as it was in the decades behind. To achieve even a version of what we want, we need economic growth. It’s the only thing that allows a government to raise increasing amounts of tax without squeezing the voters so hard that they rebel.

The menu of what to do is surprisingly simple. Stable, sane government: please, God, yes. (That’s the first item on Johnson’s list.) Planning desperately needs reform – it needs to be easier to build buildings and infrastructure. Tax needs reform: it is too complicated and full of distorted incentives. Our current pension system is unaffordable and unfair. Social care is in crisis, which puts the entire health system in crisis too. A new trading arrangement with the EU. A new settlement between the generations, getting off this track where the desperate young are strapped into harness to pay for the complacent old. In all these areas, we have done enough counting to know what needs to be done. We need political leaders who are willing to tell us what we need to hear, and we also, perhaps, need to grow up a bit: we need to listen to unwelcome truths. We might want to be somewhere else, but we are where we are.