The European Union is applying new legal restraints around artificial intelligence this year. The US is still trying to figure out how far it wants to go.

The European Parliament in December reached a provisional agreement on the world’s first comprehensive legislation to regulate AI, focusing on uses instead of the technology.

The new rules range in severity depending on how risky the application is, with facial recognition and certain medical innovations requiring approval before being made available to customers.

Federal laws specific to AI don’t exist yet in the US, and it’s unclear whether any will be enacted. The EU’s actions, however, could still have a chilling effect on companies based in this country.

“Any software- and data-technology-rich issue crosses borders so quickly,” intellectual property expert Gareth Kristensen, a partner with the law firm Cleary Gottlieb Steen & Hamilton, said.

The EU is throwing its weight around in other parts of the tech world too. Just this week, EU opposition to a union of two American tech companies — Amazon (AMZN) and robot vacuum maker iRobot (IRBT) — was enough for the firms to call off their $1.4 billion merger.

Not everyone in the US agrees new laws are needed to oversee AI. To date, Washington’s attempts to regulate the industry have proceeded slowly and on parallel tracks at the White House and Capitol Hill.

An executive order issued by President Biden last October directed AI developers and users to apply AI “responsibly.” Just this week, the administration announced new details on that order, including a new requirement for developers to disclose their safety test results to the government.

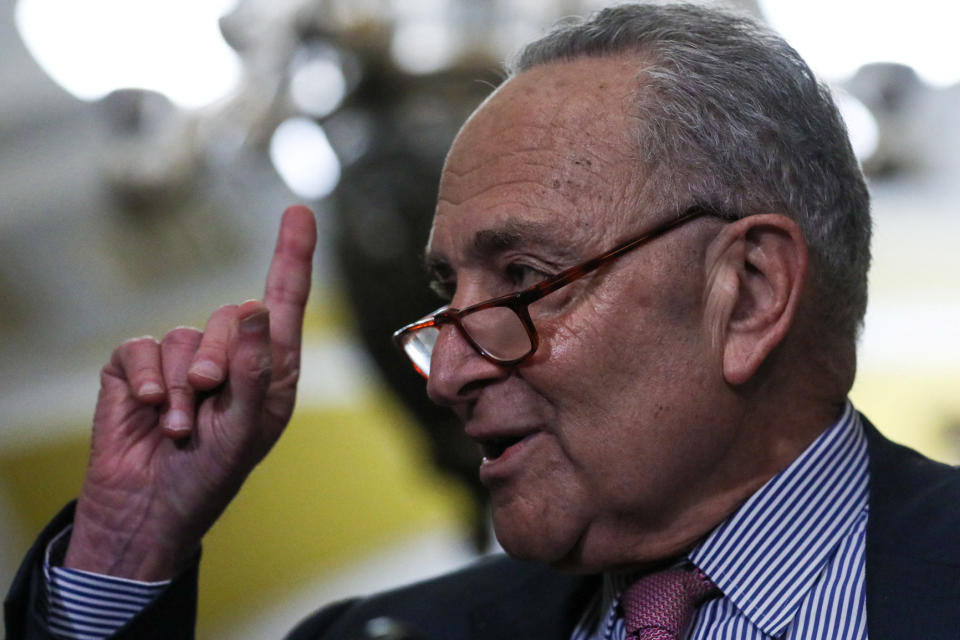

On Capitol Hill, meanwhile, Senate Majority Leader Chuck Schumer has overseen a series of roundtables — with figures like Elon Musk and others in attendance — to begin the process of drafting new laws around the topic.

He will be tasked with merging a group of bipartisan bills under consideration. That includes one from Democratic Senator Gary Peters and Republican Senators Mike Braun and James Lankford that would require US government agencies to disclose when they use AI for human interactions and would put in place an appeals process allowing individuals to object to AI-generated decisions.

In another bill introduced in June, Democratic Senators Michael Bennet and Mark Warner joined Republican Senator Todd Young in proposing an office of global competition analysis that would be tasked with helping the US maintain global leadership in AI.

Democratic Senator Richard Blumenthal and Republican Senator Josh Hawley also announced their own framework for AI legislation. They proposed that the US establish an independent licensing and oversight organization, along with AI transparency requirements and legal liability for harms caused by the technology.

There’s no guarantee that any of this new US legislation will come to pass.

“The US is a very free market, and [it’s] difficult to get bipartisan consensus on a federal level,” Kristensen said.

‘Proactive rather than reactive’

The US has deliberately not gone the way of the EU, said Ryan Abbott, a technology-focused intellectual property attorney and medical doctor. America’s more market-driven “less regulation is more” philosophy, he said, could lead lawmakers to police AI using existing IP, copyright, and trademark laws.

“There’s a whole other view of this, which is that you really don’t need something comprehensive because, really, existing rules cover AI regulation,” Abbott added.

Intellectual property law governs patents, copyrights, and trademarks. And tort law protects individuals from harmful products placed on the market.

Abbott, however, recommended in testimony before the Senate Judiciary Committee last June that lawmakers at least consider amending intellectual property laws so that AI-generated inventions are clearly patentable. Under current US law, only human inventors are eligible to obtain a patent.

Musk, one of the original backers of ChatGPT creator OpenAI, has said that perhaps a new federal department is needed to stay on top of the risks. That’s what he told reporters after meetings on Capitol Hill in September.

“The reason that I’ve been saying that before, about the whole AI safety in advance of sort of anything terrible happening, is that consequences of AI going wrong are severe. So we have to be proactive rather than reactive,” Musk said last September.

White House national economic advisor Lael Brainard and acting Labor Secretary Julie Su said in an op-ed piece published in Yahoo Finance Tuesday that the Labor Department is creating a set of principles for “the responsible, worker-centric use of AI” while the administration prepares “detailed policy recommendations to protect and support workers against potential AI-related job displacement.”

A similar approach is unfolding in the United Kingdom. That nation’s government is now advocating for companies to voluntarily comply with key principles that its lawmakers set out in a white paper published last year. The UK is expected, for now, to empower its regulators to apply existing law to AI, and to base any potential legislation on future levels of compliance.

China has adopted more politically focused AI laws. Those requirements target AI-generated news distribution, deep fakes, chatbots, and datasets. To date, they are much less comprehensive than the EU’s AI Act.

Kristensen estimated that the EU’s new AI Act is likely to take effect over the next six to 24 months as members of the 27-nation bloc sign off on the final text.

Some members, including the French government, have been rumored to be exploring whether to revise and push back on certain parts of the act.

Whatever final regulations each government adopts, Abbott said, will play a big role in how AI is developed and used from jurisdiction to jurisdiction.

“There’s sort of a perception that there’s an AI arms race right now, internationally.”

Alexis Keenan is a legal reporter for Yahoo Finance. Follow Alexis on Twitter @alexiskweed.
